Review Metrics

Reporting on reviews gives you visibility into the feedback that you and your agents receive for the conversations they have engaged in. Reviews cover both human and AI feedback.

Before You Start

This article covers the details of creating metrics on top of the Logical Model. It is useful to have an understanding of the following areas:

- Logical Model helps you understand how reviews relate to the rest of the data in Salted CX.

- Review data set describes which attributes and facts are available for individual reviews.

- Custom metrics covers the basics of writing a custom metric in Salted CX.

- Metrics reference contains a list of built-in metrics that you can use without writing your own, or reuse inside your own metrics.

Review Type and Status

Ensure that you filter metrics to the review type you are interested in. For example, you can combine Auto reviews with manual reviews (type Reviewer), but make sure you know why you are doing so.

| Type | Description |
|---|---|
| Agent | The review was provided by the agent on their own engagements. This type of review captures the agent's perspective. |
| Auto | The review was provided by an automated service. |
| Customer | The review was provided by the customer. This is typical for customer journeys. |
| Reviewer | The review was provided by a person responsible for quality assurance in the company. This person is different from the agent who handled the engagement. |

Each review in Salted CX has a status, which enables reporting on planned reviews, customer surveys without answers, cases in which a reviewer declined to review, and so on. Use statuses in metric definitions to ensure that only the expected reviews are counted.

| Status | Description |
|---|---|
| Completed | The review is completed and can be included in reports. |
| Deleted | The review was deleted and should not appear in the results. |
| Ignore | The review is not relevant. |
| Pending | The review is scheduled, but the reviewer has not answered yet. This happens when you plan reviews for reviewers to do and want to report on those that are still outstanding. |
| Rejected | The reviewer declined to review the engagement. This may happen when the reviewer concludes that the engagement is not a representative sample to review. |
| Timeout | The review was requested, but the reviewer did not provide it. This may happen when reviewers did not have time to review or, for customer reviews, when customers chose not to answer. |

The following custom metric counts the engagements that have completed manual (Reviewer) or Auto reviews. Only reviews that answer the question “Knowledge Gap” or “Inaccurate Information” are included.

SELECT COUNT(Engagement)
WHERE Review Type IN ("Auto", "Reviewer")
AND Review Status IN ("Completed")
AND Question Name IN ("Knowledge Gap", "Inaccurate Information")
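To make the filtering logic of this metric concrete, here is a minimal Python sketch that applies the same three filters to an in-memory list of reviews. It is not how Salted CX evaluates metrics: the `Review` record, its field names, and the sample data are hypothetical, and it assumes that `COUNT(Engagement)` counts each matching engagement once, as counting over an attribute does in MAQL-style metric languages.

```python
from dataclasses import dataclass


# Hypothetical stand-in for rows of the Review data set (not a Salted CX API).
@dataclass(frozen=True)
class Review:
    engagement: str
    review_type: str  # e.g. "Agent", "Auto", "Customer", "Reviewer"
    status: str       # e.g. "Completed", "Pending", "Timeout"
    question: str


def count_engagements(reviews, types, statuses, questions):
    """Count distinct engagements with at least one review matching every filter."""
    return len({
        r.engagement
        for r in reviews
        if r.review_type in types and r.status in statuses and r.question in questions
    })


reviews = [
    Review("E1", "Auto", "Completed", "Knowledge Gap"),
    Review("E1", "Reviewer", "Completed", "Inaccurate Information"),  # same engagement, counted once
    Review("E2", "Reviewer", "Pending", "Knowledge Gap"),             # excluded: not completed
    Review("E3", "Customer", "Completed", "Knowledge Gap"),           # excluded: wrong review type
    Review("E4", "Auto", "Completed", "Inaccurate Information"),
]

result = count_engagements(
    reviews,
    types={"Auto", "Reviewer"},
    statuses={"Completed"},
    questions={"Knowledge Gap", "Inaccurate Information"},
)
print(result)  # prints 2 (engagements E1 and E4)
```

Dropping the status filter in this sketch would also count E2, whose review is still pending, which is exactly the kind of unexpected inclusion the status filter guards against.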

Answer Score versus Score

Each Review in Salted CX has two scores:

- Answer Score: The score on the original rating scale. Use it only when you know that all questions in a metric use the same rating scale.

- Score: The Answer Score normalized to a percentage in the range from 0% to 100%. This enables you to combine scores from multiple questions with different rating scales in a single metric.

Examples of Answer Score versus Score for selected question types:

| Question Type | Answer | Answer Score | Score |
|---|---|---|---|
| NPS (0 to 10) | Detractor | 0 | 0% |
| NPS (0 to 10) | Detractor | 1 | 10% |
| NPS (0 to 10) | Passive | 7 | 70% |
| NPS (0 to 10) | Promoter | 10 | 100% |
| Five Stars (1 to 5) | ★☆☆☆☆ | 1 | 0% |
| Five Stars (1 to 5) | ★★★☆☆ | 3 | 50% |
| Five Stars (1 to 5) | ★★★★★ | 5 | 100% |
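Every row in this table is consistent with a linear (min-max) rescaling of the Answer Score onto 0% to 100%. The formula below is inferred from those values, not taken from Salted CX documentation, so treat it as an assumption:

$$\text{Score} = \frac{\text{Answer Score} - \text{scale min}}{\text{scale max} - \text{scale min}} \times 100\%$$

A minimal Python sketch of that assumed formula, reproducing the table rows (the `normalize` function is hypothetical):

```python
def normalize(answer_score: float, scale_min: float, scale_max: float) -> float:
    """Linearly map an answer on [scale_min, scale_max] onto 0-100 percent (assumed formula)."""
    if scale_max <= scale_min:
        raise ValueError("scale_max must be greater than scale_min")
    # Multiply before dividing so the table values come out exactly.
    return (answer_score - scale_min) * 100 / (scale_max - scale_min)


# Rows from the table: (answer score, scale min, scale max)
rows = [(0, 0, 10), (1, 0, 10), (7, 0, 10), (10, 0, 10), (1, 1, 5), (3, 1, 5), (5, 1, 5)]
for answer, low, high in rows:
    print(f"{answer} on a {low}-{high} scale -> {normalize(answer, low, high):.0f}%")
```

Note that the lowest possible answer always maps to 0%, which is why one star is 0% rather than 20%.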

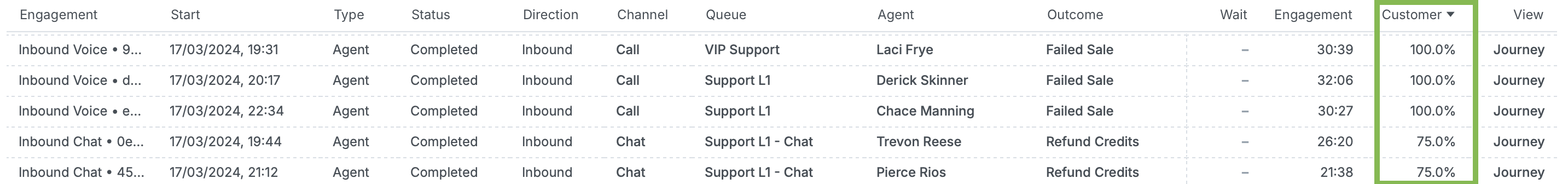

Engagement Level Review Results

In some reports, you might want to show the review score on the same row as the engagement, rather than listing each review on a separate row. See the table below for an example:

Because a single engagement can have multiple reviews (even for the same question, from different reviewers), Salted CX cannot represent them as a single number unless you specify how to reduce them to one value. Decide which aggregation function to use, and then either use a built-in metric or create your own. For example, the following metric returns the lowest completed Score for the question “How would you rate the agent?”:

SELECT MIN(Score)
WHERE Question IN ("How would you rate the agent?")
AND Review Status IN ("Completed")

| Aggregation | Usage |
|---|---|
| Minimum | Use the MIN aggregation function to highlight potential issues that need attention. This is typically what you want. |
| Average | Use the AVG aggregation function to get a “fair” assessment of an engagement. Make sure you include only the reviews you actually want so that, for example, negative feedback from customers is not masked by very positive feedback from agents and reviewers. |
| Median | The median has limited use at the engagement level. It is typically useful on larger data samples rather than on the few reviews associated with a single engagement. |
| Maximum | Use the MAX aggregation function to highlight exceptional performance. As with the average, include only the reviews you actually want; otherwise, very positive feedback from agents and reviewers can mask negative feedback from customers. Most dashboards and visualizations do not need the maximum, as it serves specific use cases. |
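The following Python sketch illustrates how the choice of aggregation changes the engagement-level result. Python's `min`, `max`, `statistics.mean`, and `statistics.median` stand in for the metric-language aggregations; the engagements and Score values are hypothetical.

```python
from statistics import mean, median

# Hypothetical completed Score values (0-100) for one question, grouped by engagement.
scores_by_engagement = {
    "E1": [20.0, 90.0, 100.0],  # an unhappy customer plus positive agent and reviewer reviews
    "E2": [80.0],
}

AGGREGATIONS = {"MIN": min, "AVG": mean, "MEDIAN": median, "MAX": max}


def engagement_level(scores, func):
    """Reduce each engagement's reviews to a single number with the chosen aggregation."""
    return {engagement: func(values) for engagement, values in scores.items()}


for name, func in AGGREGATIONS.items():
    print(name, engagement_level(scores_by_engagement, func))
```

For E1, MIN surfaces the customer's 20, AVG gives 70, MEDIAN gives 90, and MAX gives 100, so the customer's negative feedback disappears entirely under MAX. For E2, with a single review, all four aggregations agree.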

If you do not filter a metric, visualization, or dashboard to a specific question, or to questions that share the same rating scale, strongly consider using the Score fact instead of Answer Score. The Score fact normalizes different rating scales to the range from 0% to 100%. When questions use different rating scales, an average of Answer Score values is not an actionable figure.
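A small Python sketch of why mixing rating scales makes an average of Answer Score meaningless. It assumes that Score is a linear rescaling of Answer Score, which matches the examples in the Answer Score versus Score table but is not confirmed by the documentation; the `to_score` helper and the sample answers are hypothetical.

```python
from statistics import mean

# Hypothetical answers: (answer score, scale min, scale max). Both are top ratings.
answers = [
    (5, 1, 5),    # five stars on a Five Stars question
    (10, 0, 10),  # 10 on an NPS question
]


def to_score(answer_score, scale_min, scale_max):
    """Rescale linearly to 0-100 percent (assumed to match the Score fact)."""
    return (answer_score - scale_min) * 100 / (scale_max - scale_min)


avg_answer_score = mean(a for a, _, _ in answers)    # mixes stars with NPS points
avg_score = mean(to_score(*row) for row in answers)  # both answers become 100%
print(avg_answer_score, avg_score)
```

The average Answer Score of 7.5 suggests a middling result even though both customers gave the highest possible rating, while the average Score correctly reports 100%.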