Quality Assurance

Salted CX focuses on an agile review process that prioritizes the maximum amount of feedback for agents with as short a feedback cycle as possible. Additionally, Salted CX can use the feedback collected from people to train AI, which then enhances the quality assurance process.

Even if quality assurance is done automatically by AI, human feedback is necessary to provide training data for the AI and to ensure that the results provided by AI are aligned with business expectations.

More Reviews that Matter

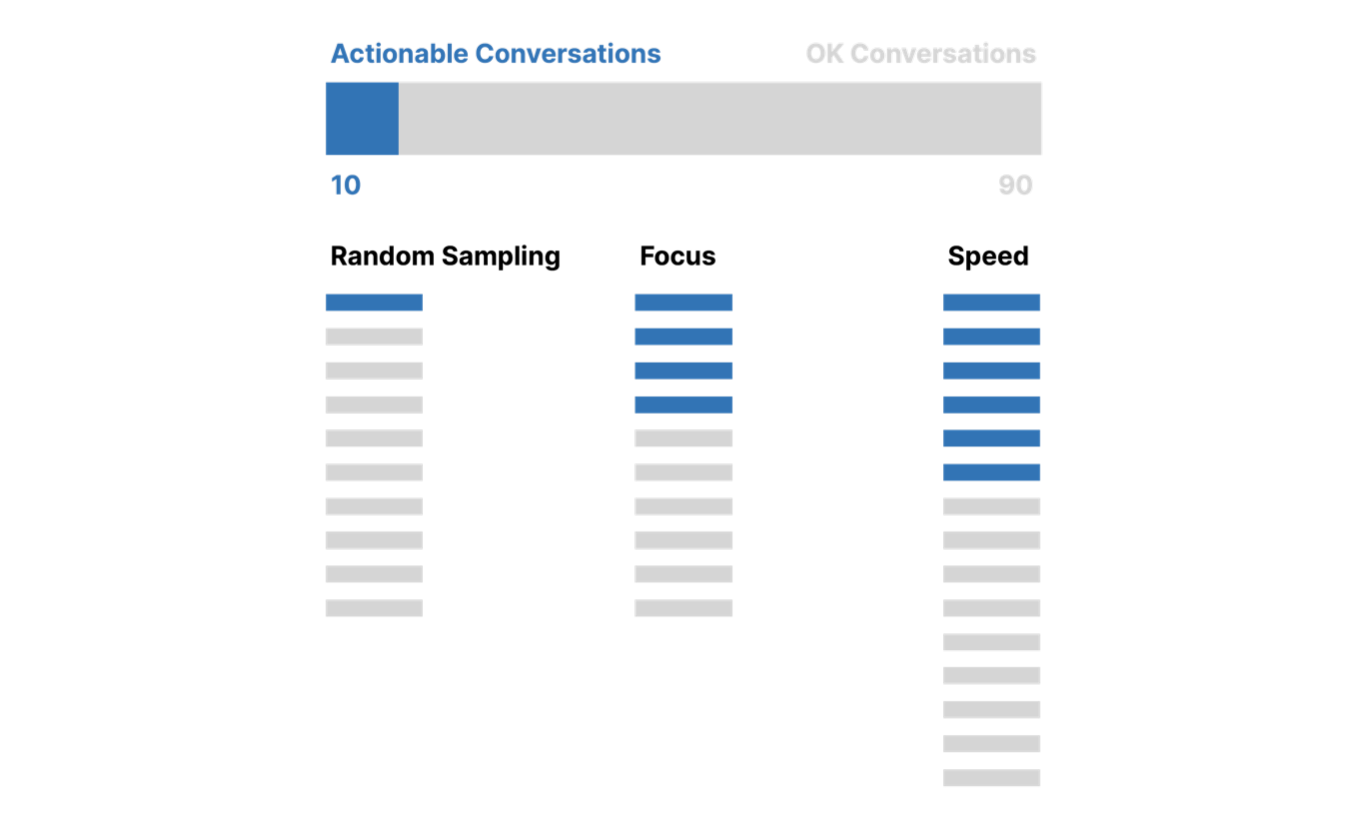

To get the most value from the time and effort you invest in reviews, you have two high-level options:

- Focus on more interesting conversations. Reviewing these conversations is more likely to lead to actionable findings. You do this by selecting conversations with criteria based on their metadata and content.

- Review more conversations. Spend less time on an individual conversation to maximize conversations reviewed per unit of time.

The exact ratio of actionable findings from random-sample reviews to those from focused and fast reviews depends on multiple factors, including:

- The percentage of actionable conversations out of total volume.
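As a rough illustration of how the share of actionable conversations and the time spent per review interact, the sketch below compares expected actionable findings per reviewer hour for the two approaches. The function name `findings_per_hour` and every number in it are hypothetical assumptions chosen for illustration, not Salted CX measurements.

```python
def findings_per_hour(actionable_rate: float, minutes_per_review: float) -> float:
    """Expected actionable findings per hour of reviewer time."""
    reviews_per_hour = 60 / minutes_per_review
    return actionable_rate * reviews_per_hour


# Hypothetical inputs: in a random sample, 5% of conversations are actionable
# and filling in a full form takes 15 minutes; in focused reviews, 40% are
# actionable and a quick annotation pass takes 5 minutes.
random_sample = findings_per_hour(actionable_rate=0.05, minutes_per_review=15)
focused = findings_per_hour(actionable_rate=0.40, minutes_per_review=5)

print(f"random sample: {random_sample:.2f} findings/hour")
print(f"focused:       {focused:.2f} findings/hour")
print(f"ratio:         {focused / random_sample:.1f}x")
```

Replace the assumed rates and review times with values measured in your own contact center to see how the ratio shifts.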

The table below contains the comparison of random sampling and focused reviews:

| | Opportunity Discovery Approach | Random Sample Legacy Quality Assurance |
|---|---|---|
| Primary Goals | Discover opportunities to improve key business metrics, often in collaboration with other departments in the company. | Provide agents with a fair assessment of their performance that can be used to calculate compensation and even make career decisions. Provide performance reports to a 3rd party or higher management to monitor how the contact center is doing. |
| Integration with AI | Quality Assurance also annotates individual turns for training AI models. | Hard to use for training AI models, as most of the reviewed content is not actionable and most feedback is engagement-wide, which means that the AI model does not know what exactly caused the feedback. This gets worse for longer engagements. |
| Main Advantages | Focuses on resolving issues and uncovering opportunities that have the highest impact on the company's performance. A high ratio of reviewed conversations contains interesting moments you can act on. | Provides a benchmark value for performance based on human feedback. |
| Main Disadvantages | Does not provide a fair assessment of agents’ performance. Depending on whether you focus on problems or highlights, it can create a very skewed perception of performance. Requires constant changes to reflect changing business goals and external factors. | Low number of reviewed conversations. A low percentage of actionable findings out of reviewed conversations (in a well-managed contact center, most conversations are OK). Sometimes it is hard to keep the customer perspective aligned with the tracked quality score (for example, quality scores are high while customer satisfaction is low). |
| Percentage of manually reviewed conversations | Typically around 5%. Depends on the specific use case. | Typically around 1%. Depends on the duration/length of the conversations and the complexity of the form used for reviews. |
| Choosing Conversations for Review | Conversations that are outliers in key metrics, including but not limited to customer satisfaction, engagement time, and wrap-up time. Conversations that were automatically reviewed by Salted CX AI and were tagged with a tag that indicates some behavior worth somebody’s attention. Conversations that contain specific content found with ad hoc Semantic Search. | Random sample. |
| Manual review process | Read through conversations in Customer Journey and use feedback forms to annotate turns and engagements whenever you find something worth attention. | Answer questions in a form by browsing and skimming conversations over and over. |
| Steps after the manual review | All the results are visible in dashboards automatically within the next 15 minutes. People who requested the review can take action on those review results. | Share the quality score with management to keep track of the key quality indicators. |
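The two ways of choosing conversations in the table can be sketched in a few lines. This is a minimal illustration that assumes conversations are available as plain records. The `Conversation` fields, the `customer_unhappy` tag, and all thresholds are hypothetical and do not reflect the Salted CX data model or API.

```python
import random
from dataclasses import dataclass, field
from typing import Optional, Set


@dataclass
class Conversation:
    id: str
    csat: Optional[int]          # customer satisfaction 1-5, None if not rated
    engagement_seconds: int
    auto_tags: Set[str] = field(default_factory=set)


def select_focused(conversations, max_csat=2, min_engagement_seconds=1800,
                   tags_of_interest=frozenset({"customer_unhappy"})):
    """Pick conversations that are metric outliers or carry a tag of interest."""
    selected = []
    for c in conversations:
        low_csat = c.csat is not None and c.csat <= max_csat
        long_engagement = c.engagement_seconds >= min_engagement_seconds
        tagged = bool(c.auto_tags & tags_of_interest)
        if low_csat or long_engagement or tagged:
            selected.append(c)
    return selected


def select_random(conversations, share=0.01, seed=0):
    """Pick a random sample covering roughly `share` of all conversations."""
    rng = random.Random(seed)
    k = max(1, round(len(conversations) * share))
    return rng.sample(conversations, k)


# Hypothetical data for illustration only.
conversations = [
    Conversation("c1", csat=5, engagement_seconds=300),
    Conversation("c2", csat=1, engagement_seconds=420),
    Conversation("c3", csat=None, engagement_seconds=2400),
    Conversation("c4", csat=4, engagement_seconds=360, auto_tags={"customer_unhappy"}),
    Conversation("c5", csat=4, engagement_seconds=280),
]

focused_ids = [c.id for c in select_focused(conversations)]
random_ids = [c.id for c in select_random(conversations, share=0.2)]
print(focused_ids)
print(random_ids)
```

With this data, the focused selection returns the low-satisfaction, long-running, and tagged conversations, while the random sample may well land on a conversation with nothing to act on.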

Reviewing Conversations

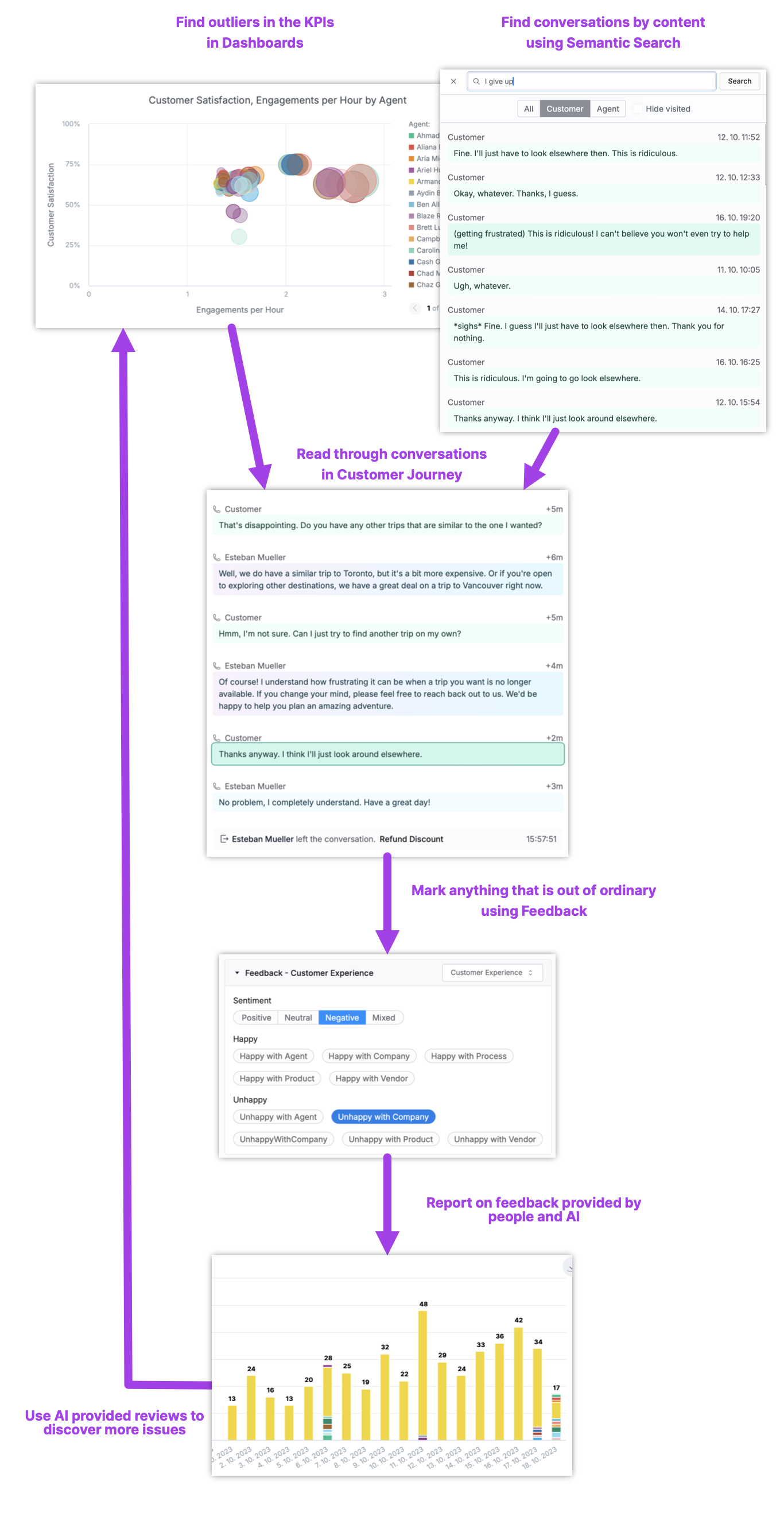

Users review conversations based on findings.

How to Manually Review Turns and Engagements

After you drilled down to a customer journey.

-

Navigate to the part of the customer journey that is relevant for your review. Drill-down from a dashboard often leads to a specific conversation or engagement. Discovery using Semantic Search leads to a specific turn. You can use the summary on the left side to focus on conversations happening in a time frame you are interested in. You do not often need to review a complete customer journey.

-

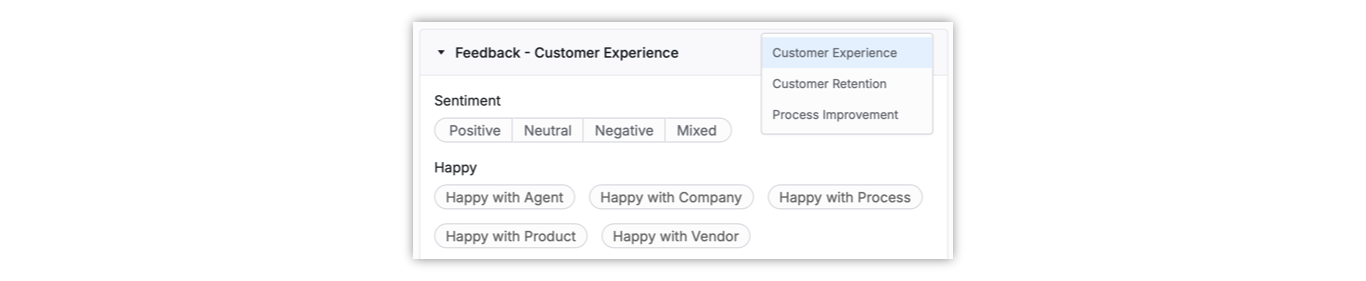

Read through the part of the customer journey relevant for your review. Whenever you find anything worth your attention. Use the Feedback panel on the right to tag it with a given behavior. Choose the best form for your review. You can use different forms for customer, agent, and bot turns and Salted CX remembers the choice for individual turns separately.

-

You can use the Reviewed tag to mark the engagement as completely reviewed at the end.

Useful tags for general use:

- Bookmark: marks the current turn or conversation for later use, so that it is easy to find again.

- Reviewed: marks the entire engagement as reviewed in reports and counts toward the coverage of agent engagements that were reviewed. Use this tag to show that you went through the entire engagement, so that nothing important slips through.

See the full list of built-in tags and questions.
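To illustrate how the Reviewed tag can feed a coverage figure, the sketch below computes the share of each agent's engagements that carry the tag. The record layout and the `review_coverage` helper are hypothetical. Salted CX reports coverage in its own dashboards, and this is only an approximation of the idea.

```python
from collections import defaultdict

# Hypothetical engagement records: (agent, engagement ID, tags applied by reviewers).
engagements = [
    ("ana", "e1", {"Reviewed", "Bookmark"}),
    ("ana", "e2", set()),
    ("ben", "e3", {"Reviewed"}),
    ("ben", "e4", {"Reviewed"}),
    ("ben", "e5", {"Bookmark"}),
    ("ben", "e6", set()),
]


def review_coverage(records):
    """Share of each agent's engagements that carry the Reviewed tag."""
    total = defaultdict(int)
    reviewed = defaultdict(int)
    for agent, _engagement_id, tags in records:
        total[agent] += 1
        if "Reviewed" in tags:
            reviewed[agent] += 1
    return {agent: reviewed[agent] / total[agent] for agent in total}


coverage = review_coverage(engagements)
for agent, share in coverage.items():
    print(f"{agent}: {share:.0%} of engagements reviewed")
```

Note that a Bookmark on its own does not count toward coverage in this sketch. Only engagements explicitly marked as Reviewed do.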

Tags and Questions for Your Business

Salted CX provides a rich set of built-in tags and questions that try to cover common use cases. These provide a great starting point.

Contact Salted CX to create tags and questions for you. This step cannot currently be done directly in the application.

Auto Reviews

Auto reviews are similar to the reviews people can provide in our Customer Journey using the Feedback panel. However, auto reviews are provided by AI. They can also be associated with a specific turn.

Auto reviews analyze 100% of conversations and attach reviews to them. These reviews are easy to distinguish from reviews provided by people. You can report on them and you can see them in our Customer Journey.

How auto reviews are useful:

- Watch the number of conversations that contain certain behaviors over time, making sure the number grows (for positive tags) or declines (for negative tags).

- Identify agents, teams, or other clusters of conversations that contain unwanted behavior to have a look at the root cause.

- List conversations that have a tag or a combination of tags for review by a person. For example, you might use auto reviews to find places where customers are unhappy and then walk through those conversations to learn the reasons and categorize them.
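The three uses above map onto simple aggregations over tagged conversations. The sketch below assumes auto review results have been exported as one record per conversation. That export format, the field names, the tag names, and the sample data are all hypothetical and are not a documented Salted CX interface.

```python
from collections import Counter
from datetime import date

# Hypothetical export: one record per auto-reviewed conversation.
auto_reviews = [
    {"day": date(2024, 5, 6), "agent": "ana", "conversation": "c1",
     "tags": {"greeting_missing"}},
    {"day": date(2024, 5, 6), "agent": "ben", "conversation": "c2",
     "tags": {"customer_unhappy", "long_hold"}},
    {"day": date(2024, 5, 7), "agent": "ben", "conversation": "c3",
     "tags": {"customer_unhappy"}},
    {"day": date(2024, 5, 7), "agent": "ana", "conversation": "c4",
     "tags": set()},
]


def tag_trend(reviews, tag):
    """Number of conversations per day that carry `tag`."""
    return Counter(r["day"] for r in reviews if tag in r["tags"])


def tag_by_agent(reviews, tag):
    """Number of conversations per agent that carry `tag`."""
    return Counter(r["agent"] for r in reviews if tag in r["tags"])


def with_all_tags(reviews, required):
    """IDs of conversations that carry every tag in `required`."""
    return [r["conversation"] for r in reviews if required <= r["tags"]]


trend = tag_trend(auto_reviews, "customer_unhappy")
by_agent = tag_by_agent(auto_reviews, "customer_unhappy")
review_queue = with_all_tags(auto_reviews, {"customer_unhappy", "long_hold"})

print(dict(trend))
print(dict(by_agent))
print(review_queue)
```

The last list is the kind of short queue a person could then walk through manually to learn and categorize the reasons behind the tag.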

Contact Salted CX to start auto-reviewing using a specific tag. This step cannot currently be done directly in the application.

The accuracy of auto reviews depends on the use case and the amount and clarity of the manual reviews. Every AI model requires training input that is as accurate as possible.