AI-Powered Quality Assurance

You can use AI to automatically review 100% of your conversations with customers, including automatic reviews of individual turns in a conversation. The accuracy of the AI varies from case to case, and it is not usable in every scenario.

How AI Works

In general, AI takes an input and produces an output based on training examples. In Salted CX, the input is conversation content: transcripts and individual messages from a customer journey. The output is automatic reviews that resemble reviews done by a person. The training data the AI needs are manual reviews provided by people reviewing conversations.

Training Data

Although AI typically has a basic understanding of language, it does not understand your business. To understand your business, the AI needs training data, which means example human reviews of your conversations. The number of examples needed depends on the case you want to use AI for.

Data Annotation

Data annotation is a process during which people create training data for AI. People review data (for example, conversation content) and annotate it. The AI then uses the annotated data to mimic people's decisions. In Salted CX, data annotation happens as part of manual reviews. This means that manual reviews not only provide feedback to agents but also build a repository of training data for the AI.

Manual Reviews for Training AI

To give AI training examples for automatic reviews, you need to provide example human reviews. The number of reviews needed depends on the specific case, because some behaviors are easier to identify than others.

Turn-Level Reviews

Turn-level reviews are essential for providing AI with very targeted training data. Reviews on individual turns help AI understand which part of an entire conversation was most significant for the given review.

Engagement-wide reviews, which are common in legacy quality assurance, do not point to the exact moment in a conversation. This makes it significantly more difficult for AI to understand which content in the conversation is the main contributor to the behavior you are looking for. Training AI on legacy quality assurance reviews would lead to significantly lower accuracy and require orders of magnitude more reviewed engagements.

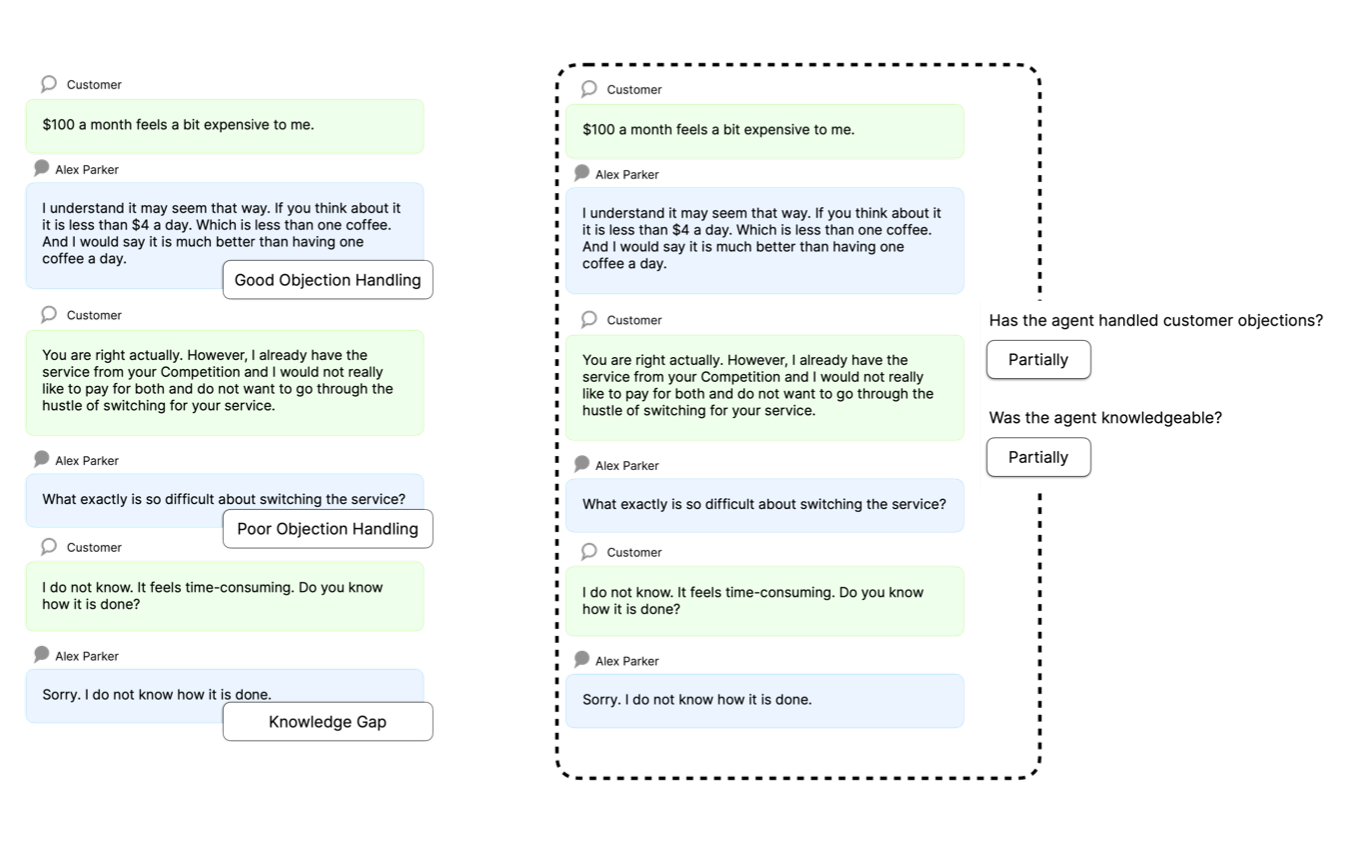

The example above compares turn-level tags with engagement-level quality assurance questions. Notice that the snippet from a conversation contains examples of both good objection handling and poor objection handling. Turn-level tags enable AI to understand exactly what is perceived as a good example or a bad example.

With engagement-level questions, these opposite behaviors are not distinguishable from each other. Additionally, AI has to use the entire conversation content, which can contain unrelated conversation topics, as a training example.

To sum up, engagement-wide questions used in legacy quality assurance processes have these issues:

- Noise from unrelated parts of a conversation. If the behavior manifests only in a subset of the conversation, the rest of the conversation adds noise to the training, because AI has to learn to ignore the unrelated content. This requires significantly more examples, which means more manual reviews. Even with more examples, the accuracy will be lower in most cases.

- An engagement can contain both good and bad behavior. In this case, the engagement cannot be used for AI training at all. Such examples would lead to non-actionable findings and reduced accuracy.
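The difference between the two approaches can be illustrated with a minimal Python sketch. It is not Salted CX code: the `Turn` class, the tag names, and the sample conversation are hypothetical and exist only to show what each kind of review hands to the AI as a training example.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    speaker: str
    text: str
    tags: tuple[str, ...] = ()  # turn-level tags from a manual review (hypothetical)


# Hypothetical snippet with unrelated content and two opposite behaviors.
conversation = [
    Turn("customer", "Hi, I have a question about my invoice."),
    Turn("agent", "Sure, let me pull it up."),
    Turn("customer", "Honestly, the premium plan is too expensive."),
    Turn("agent",
         "I understand. It includes priority support, "
         "which would have covered last week's outage.",
         ("good_objection_handling",)),
    Turn("customer", "I still do not see why I need it."),
    Turn("agent", "Well, that is the price.", ("poor_objection_handling",)),
]


def turn_level_examples(turns):
    """One training example per tagged turn: (turn text, tag)."""
    return [(t.text, tag) for t in turns for tag in t.tags]


def engagement_level_example(turns):
    """One training example for the whole engagement: (full text, all tags)."""
    full_text = " ".join(t.text for t in turns)
    labels = sorted({tag for t in turns for tag in t.tags})
    return full_text, labels


for text, label in turn_level_examples(conversation):
    print(label, "<-", text)

full_text, labels = engagement_level_example(conversation)
print(labels)  # ['good_objection_handling', 'poor_objection_handling']
```

In this sketch, the turn-level examples each pair one short agent message with one tag. The engagement-level example pairs the whole conversation, including the unrelated invoice question, with two contradictory labels.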

Tags and Questions

You need to provide manual reviews as training data for every tag or question you want to use AI for. You can create your own custom tags and questions for AI-powered auto reviews.
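As a rough illustration of tracking this, the sketch below counts manual reviews per tag and lists the tags that still lack examples. The data layout, the tag names, and the `min_examples` threshold are all assumptions made for the example. The number of reviews actually needed depends on the specific case.

```python
from collections import Counter

# Hypothetical export of manual reviews: one (tag, turn_id) pair per tagged turn.
manual_reviews = [
    ("poor_objection_handling", "t-101"),
    ("poor_objection_handling", "t-245"),
    ("good_objection_handling", "t-102"),
    ("rude_customer", "t-310"),
    ("poor_objection_handling", "t-377"),
]


def tags_needing_examples(reviews, wanted_tags, min_examples):
    """Return {tag: missing_count} for wanted tags with too few manual reviews."""
    counts = Counter(tag for tag, _ in reviews)
    return {
        tag: min_examples - counts[tag]
        for tag in wanted_tags
        if counts[tag] < min_examples
    }


wanted = ["poor_objection_handling", "good_objection_handling", "upsell_attempt"]
# min_examples=3 is an arbitrary illustrative threshold, not a recommendation.
print(tags_needing_examples(manual_reviews, wanted, min_examples=3))
# {'good_objection_handling': 2, 'upsell_attempt': 3}
```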

When you create tags and questions for AI-powered quality assurance, each tag and question should be individually actionable. Actionable tags typically capture one of these behaviors:

- Unwanted behavior. Behavior of either an agent or a customer that is not welcome in your business. You might want to address it either by talking to the agent or by changing processes and policies.

- Exceptionally good behavior. Behavior that can serve as a good example, where you exceed customer expectations thanks to your products, services, processes, or agents. This should not include the baseline performance expected from the agent. Baseline behavior should be considered the default and should not require attention.

- Unexpected behavior. Behavior that is new or unexpected. You may want to check how common it is and whether you need to adapt your processes and train agents for it.

Behavior that represents an expected customer experience is not worth tagging in most cases. We recommend spending your review time as efficiently as possible and focusing on behavior you can act on later: behavior that tells you that you need to talk to an agent, change something in your business process, or fix something in a product.

Check built-in tags and questions.

How to Review Conversations

To review a conversation in an AI-friendly manner, we strongly encourage you to follow our recommendations in the Quality Assurance article, especially these:

- Read a conversation in chronological order. This gives you an understanding of the context and helps you to relive the customer experience.

- When you encounter unwanted, exceptionally good, or unexpected behavior, tag it. You can use one of the built-in tags, create your own, or, if the behavior is something new, use the generic Bookmark tag to return to it later.

- Wrap up your review session by considering whether you want dedicated tags for a new behavior you have encountered. You might also consider adding more granularity to existing tags to distinguish between behaviors you want to report on separately.

Process Improvements

Companies evolve and external circumstances change. As a result, you keep discovering new behaviors in conversations on an ongoing basis.

- Keep your tags and questions up to date. When you encounter a new behavior that is worth watching, create a tag for it. Also, remove tags for behaviors that no longer appear in conversations.

- Add tags and questions to your forms after review sessions. If you could not tag a behavior from the form you had at hand during the review session, consider adding the tag to your form to avoid switching between different forms. This saves you valuable time. Always try to balance the length of the form with its usefulness for your reviews. Longer forms may be harder to navigate and can slow down some reviews.
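One way to spot tags that may no longer be needed is to check when each tag was last applied. The following is a minimal sketch under stated assumptions: the `last_used` mapping, the tag names, and the 90-day idle window are hypothetical, and a tag flagged here is only a candidate for removal that still needs your judgment.

```python
from datetime import date, timedelta

# Hypothetical record of when each tag was last applied in a manual review.
last_used = {
    "poor_objection_handling": date(2024, 5, 28),
    "shipping_delay_complaint": date(2023, 11, 2),
    "good_objection_handling": date(2024, 5, 30),
}


def stale_tags(last_used_by_tag, today, max_idle_days):
    """Return tags that have not been applied within the last max_idle_days."""
    cutoff = today - timedelta(days=max_idle_days)
    return sorted(tag for tag, used in last_used_by_tag.items() if used < cutoff)


# The 90-day window is an arbitrary illustrative value.
print(stale_tags(last_used, today=date(2024, 6, 1), max_idle_days=90))
# ['shipping_delay_complaint']
```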